The AIs Don’t Agree

I built a tool to ask Google, Perplexity, and ChatGPT the same question — and now I don’t trust any of them.

I built Hyperchat because I hate waiting.

Waiting for one AI to answer. Then trying another. Then Googling it anyway.

I just wanted to ask once — and get all my AIs to respond at the same time.

So I built it.

Hyperchat is a simple macOS app that loads Google, Perplexity, and ChatGPT side by side. Ask once, get three simultaneous answers. [1]
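For anyone who wants to play with the ask-once idea in code, here is a minimal sketch. It is not how Hyperchat works internally (the app loads the three services side by side); it only illustrates the fan-out pattern. The `ask` function and the delays in `PROVIDERS` are hypothetical stand-ins, not real API calls or measured timings.

```python
import asyncio

# Hypothetical stand-in for a real provider: it sleeps for `delay` seconds,
# then returns a canned string. No network calls are made.
async def ask(provider: str, delay: float, question: str) -> tuple[str, str]:
    await asyncio.sleep(delay)
    return provider, f"{provider} answer to: {question}"

async def ask_all(question: str, providers: dict[str, float]) -> list[tuple[str, str]]:
    """Send one question to every provider at once; return answers in arrival order."""
    tasks = [
        asyncio.create_task(ask(name, delay, question))
        for name, delay in providers.items()
    ]
    answers = []
    # as_completed yields each task as it finishes, so fast providers come first.
    for finished in asyncio.as_completed(tasks):
        answers.append(await finished)
    return answers

# Made-up delays, chosen only so the three stubs finish at different times.
PROVIDERS = {"ChatGPT": 0.03, "Google": 0.01, "Perplexity": 0.02}

if __name__ == "__main__":
    question = "If I fine-tune Phi-4 using LoRA, can I run it in mistral.rs?"
    for name, text in asyncio.run(ask_all(question, PROVIDERS)):
        print(f"{name}: {text}")
```

Because the answers come back in arrival order, the total wait is roughly the slowest provider's delay rather than the sum of all three.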

The idea was simple: combine Google’s speed, Perplexity’s depth, and ChatGPT’s reasoning.

At least, that’s what I thought I was building.

What I actually built was something stranger — and a little unsettling:

an AI trust erosion machine.

ChatGPT, Google and Perplexity contradict each other — a lot. There is no pattern.

I expected some kind of logic.

Maybe Google would be best for some things. Perplexity and ChatGPT for others.

But no. So far, the inconsistencies have been completely unpredictable.

It feels genuinely random.

Here’s one example:

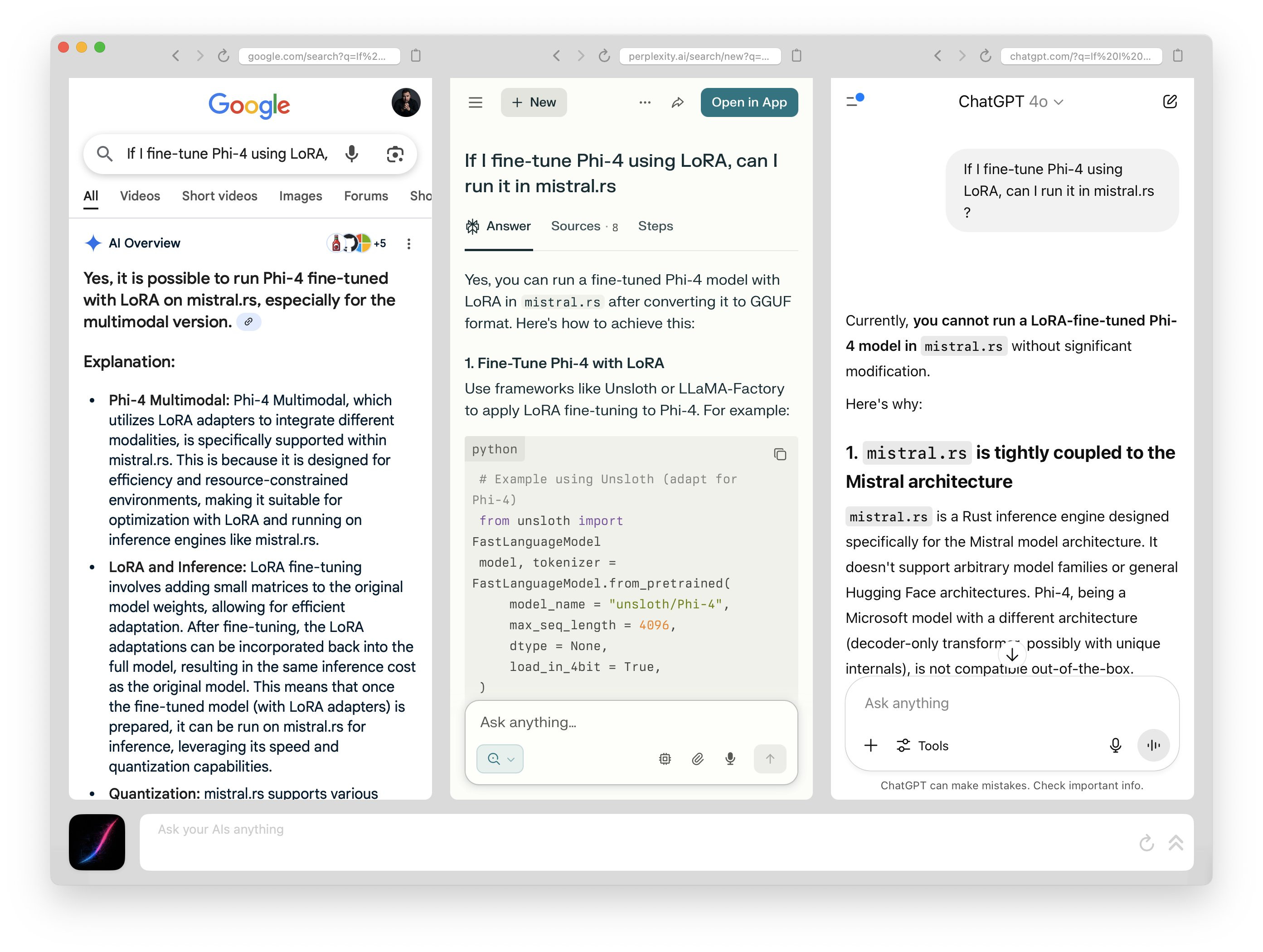

If I fine-tune Phi-4 using LoRA, can I run it in mistral.rs?

This isn’t ambiguous. It’s not subjective.

It’s a simple technical question. Yes or no.

But the answers conflicted — confidently.

Each system said something different.

And ChatGPT, the slowest of the three (which made me trust it more), was completely wrong.

But once you see it, you can’t unsee it.

The AIs screw up in places you don’t expect — and that’s the real trust problem.

It’s not just hallucinations. It’s the randomness.

The more I use Hyperchat, the less I trust LLMs.

Hyperchat changed how I use AI.

I no longer trust any single system in isolation.

I want to see what each one says before I believe anything.

And the speed differences make that feel natural.

Google answers instantly. Perplexity usually follows fast. ChatGPT brings up the rear.

It’s not consistent, but it creates a rhythm.

I’ve found myself reading them in layers — Google first, then Perplexity, then ChatGPT — escalating as I search for depth or clarity.

Hyperchat turns that into a single, seamless flow.

So yes, I built it because I hate waiting.

But somewhere along the way, what started as a speed hack… became a trust filter.

As promised, I built the whole thing using AI.

The first prototype took a day.

Five weeks to polish it (delayed by a family vacation).

Another week wrestling with Apple’s notarization pipeline.

But now?

It’s live.

Hyperchat for macOS is available now.

Ask once. See everything. Decide for yourself.

[1] Hyperchat also supports Claude, but that integration is still quite buggy.

I’ve liked using TypingMind for this. I can add all the LLMs I want, even from OpenRouter, and see them side by side. Have you tested it?

Hyperchat is an interesting idea… I always like to compare and evaluate the quality of AI output so this is 💯💯